This is part 1 of my WebRTC series:

Read Part 2 here: The curious world of web RTC (Part 2: Servers)

I believe WebRTC is one of the coolest things that has happened to the web. It gave web developers something close to superpowers to do what used to be impossible. That said, the standards and their implementations are a very different story. In this series of posts I will try to simplify WebRTC using the experience I gathered by building many WebRTC applications, running into trouble, and learning along the way.

For those who are completely new to the concept, WebRTC is a way to enable real-time communication in the browser. The standards, codecs, and tools are built into the browser itself, so no plugin is needed to access the webcam or microphone, or to create a peer-to-peer connection to another browser or device that supports WebRTC. The WebRTC standards are borrowed directly from similar standards in the telecommunication industry, so integration and interchange between different communication media is quite feasible.

What's Included?

- API to access the camera. On desktops and laptops it's the webcam; on phones and tablets it's the device camera. It supports advanced options like a preferred camera (in case there are several), video size, frame rate, etc. Not all of these options are widely supported, but at this point most devices ship with basic support. The application gets the direct feed from the camera; what to do with it is up to the developer 😉

- Ability to access the microphone. Like video, it supports many options and multiple devices, and device support varies. It can be used to record or transmit audio from the browser.

- Ability to look up the media devices connected to the computer and check every supported option they offer.

- Mechanism to create a peer-to-peer connection to another browser, or to another device that supports the same protocol. Once connected, the peer-to-peer connection can be used to stream video, audio, or any arbitrary data such as files directly from one computer to another without the need for a server*

- Some browsers support screen capture and other cool features that have very limited support today and might end up in other browsers soon. For now, we will talk about the better-supported features.

*A server is still needed for the initial connection establishment and signalling.
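To make the camera, microphone, and device-lookup bullets a bit more concrete, here is a minimal TypeScript sketch. The helper names (`buildConstraints`, `groupByKind`, `listDevices`) and the `CameraPreference` shape are made up for illustration and are not part of WebRTC; only `navigator.mediaDevices.enumerateDevices()` and the constraint keys (`deviceId`, `width`, `height`) come from the browser API. Treat it as a rough outline under those assumptions, not a complete implementation.

```typescript
// Minimal local shape of a device entry, declared here so the sketch
// compiles without the browser's DOM typings.
type DeviceInfo = { kind: string; label: string; deviceId: string };

// Hypothetical preference object for this example (not a WebRTC type).
interface CameraPreference {
  deviceId?: string; // a specific camera, if the user picked one
  width?: number;    // preferred video width in pixels
  height?: number;   // preferred video height in pixels
  withAudio?: boolean;
}

// Builds the constraints object that would be passed to getUserMedia.
// "ideal" lets the browser fall back to something close if the exact
// size is not available on the device.
function buildConstraints(pref: CameraPreference) {
  const video: Record<string, unknown> = {};
  if (pref.deviceId) video.deviceId = { exact: pref.deviceId };
  if (pref.width) video.width = { ideal: pref.width };
  if (pref.height) video.height = { ideal: pref.height };
  return {
    video: Object.keys(video).length > 0 ? video : true,
    audio: pref.withAudio ?? true,
  };
}

// Groups device entries by kind ("videoinput", "audioinput", "audiooutput").
function groupByKind(devices: DeviceInfo[]): Record<string, DeviceInfo[]> {
  const groups: Record<string, DeviceInfo[]> = {};
  for (const device of devices) {
    const list = groups[device.kind] ?? [];
    list.push(device);
    groups[device.kind] = list;
  }
  return groups;
}

// Browser-only: looks up the connected media devices.
// Returns an empty object where the API is not available.
async function listDevices(): Promise<Record<string, DeviceInfo[]>> {
  const nav = (globalThis as any).navigator;
  if (!nav?.mediaDevices?.enumerateDevices) return {};
  return groupByKind(await nav.mediaDevices.enumerateDevices());
}
```

The object returned by `buildConstraints` is what you would hand to `getUserMedia`. Keep in mind that browsers usually leave device labels empty until the user has granted permission.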

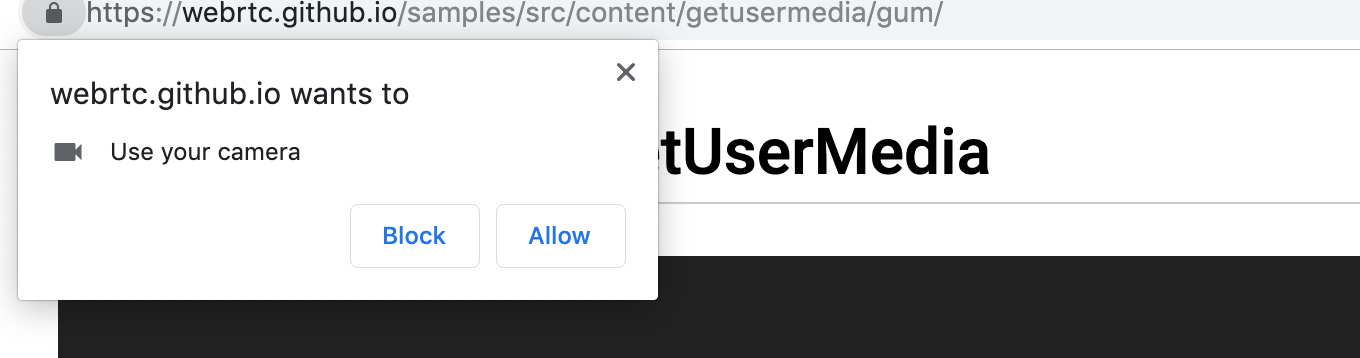
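The peer-to-peer bullet and its footnote above can be sketched roughly as follows. This is an assumption-heavy outline of the calling side only: `PeerLike` is a cut-down stand-in for the browser's `RTCPeerConnection`, `startCall` is a name I made up, and `sendSignal` is a hypothetical function that delivers messages to the other peer through whatever signalling server you run. The answering side and the server itself are left out.

```typescript
// Cut-down shapes of the browser objects used below, declared locally so
// the sketch compiles without DOM typings.
interface SessionDescription { type: string; sdp?: string }
interface PeerLike {
  createDataChannel(label: string): unknown;
  createOffer(): Promise<SessionDescription>;
  setLocalDescription(desc: SessionDescription): Promise<void>;
  onicecandidate: ((event: { candidate: unknown | null }) => void) | null;
}

// Hypothetical transport to the signalling server (WebSocket, HTTP, ...).
type SendSignal = (message: { kind: "offer" | "candidate"; payload: unknown }) => void;

// Starts a connection from the calling side and returns the data channel.
async function startCall(pc: PeerLike, sendSignal: SendSignal): Promise<unknown> {
  // Network candidates are discovered over time; each one is forwarded
  // to the other peer through the signalling server.
  pc.onicecandidate = (event) => {
    if (event.candidate) sendSignal({ kind: "candidate", payload: event.candidate });
  };

  // The channel is created before the offer so that the offer describes it.
  const channel = pc.createDataChannel("chat");

  // The offer describes what this peer wants to send and receive.
  const offer = await pc.createOffer();
  await pc.setLocalDescription(offer);
  sendSignal({ kind: "offer", payload: offer });

  return channel;
}

// In a browser this might be wired up like so (the STUN URL and `socket`
// are placeholders, and a cast may be needed under strict typings):
//   const pc = new RTCPeerConnection({ iceServers: [{ urls: "stun:stun.example.org" }] });
//   startCall(pc as unknown as PeerLike, (msg) => socket.send(JSON.stringify(msg)));
```

Once the other peer answers and the connection is established, audio, video, and data flow directly between the two peers; the server is only involved in this initial exchange.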

Wait, what? So does that mean any website can watch or hear me at any time?

No, they can't, unless you give permission. A WebRTC application requires your permission to access your camera and microphone. When an application calls the getUserMedia function (the one used to access the camera and microphone), the browser shows a popup asking for permission. You have the option to deny, to allow permanently, or to allow once and be asked again every time it tries to access the hardware.
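From the developer's side, that permission popup shows up as a promise that either resolves with a stream or rejects. Below is a small sketch of handling both outcomes. The `requestCamera` and `classifyMediaError` helpers and the `MediaResult` type are my own naming for this example; `getUserMedia` and the `NotAllowedError` / `NotFoundError` error names are the parts that come from the browser. The optional parameter exists only so the function can be exercised outside a browser.

```typescript
// Result type invented for this example.
type MediaResult =
  | { ok: true; stream: unknown }
  | { ok: false; reason: "denied" | "no-device" | "unsupported" | "other" };

// Maps the error name reported by the browser to a reason the UI can show.
function classifyMediaError(err: unknown): "denied" | "no-device" | "other" {
  const name = (err as { name?: string } | null)?.name;
  if (name === "NotAllowedError") return "denied";   // user or browser refused access
  if (name === "NotFoundError") return "no-device";  // no matching camera or microphone
  return "other";
}

// Asks for camera and microphone access. In a browser the argument can be
// omitted and navigator.mediaDevices.getUserMedia is used.
async function requestCamera(
  getUserMedia?: (constraints: object) => Promise<unknown>
): Promise<MediaResult> {
  const nav = (globalThis as any).navigator;
  const gum = getUserMedia ?? nav?.mediaDevices?.getUserMedia?.bind(nav.mediaDevices);
  if (!gum) return { ok: false, reason: "unsupported" };
  try {
    // This is the call that triggers the permission popup.
    const stream = await gum({ video: true, audio: true });
    return { ok: true, stream };
  } catch (err) {
    return { ok: false, reason: classifyMediaError(err) };
  }
}
```

A denied request is not an exceptional situation, so returning a reason instead of throwing makes it easier to show the user a friendly message.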

OK, now what about security? What if a malicious website pretends to be something else and tries to access my camera or microphone? Do I need to encrypt the data before transmission?

The good news is that only web applications with a secure origin can access the camera and microphone. In practice that means a web app has to be served from localhost or over HTTPS with a valid certificate before it is allowed to use those APIs. You also don't need to encrypt anything yourself: WebRTC encrypts what it transmits, using DTLS for data channels and SRTP for audio and video. Unless the developer of the web application misuses the data, intentionally or by mistake, the user is quite safe.
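As a rough illustration of the secure-origin rule, here is a simplified check. `looksLikeSecureOrigin` and `canUseMediaApis` are hypothetical helpers, and the real rules the browser applies are more detailed than this; inside a page, the browser's own `isSecureContext` flag is the thing to trust.

```typescript
// Simplified approximation of "secure origin": HTTPS, or a local address.
function looksLikeSecureOrigin(url: string): boolean {
  const { protocol, hostname } = new URL(url);
  if (protocol === "https:") return true;
  return (
    hostname === "localhost" ||
    hostname.endsWith(".localhost") ||
    hostname === "127.0.0.1" ||
    hostname === "[::1]"
  );
}

// Browser-side check: relies on the browser's own verdict and on the
// media API actually being exposed to the page.
function canUseMediaApis(): boolean {
  const g = globalThis as any;
  return g.isSecureContext === true && !!g.navigator?.mediaDevices;
}
```

This is why a WebRTC demo that works on `http://localhost:3000` during development can stop working when the same page is deployed over plain HTTP.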

How is this series organised?

This post is the introduction to a series of posts on WebRTC. In the following posts we will explore some of the WebRTC APIs, then talk about the servers involved, including TURN and STUN servers, and explore what it takes to create a signalling server. We will also look at the WebRTC data flow and, later, at data channels.

If anything else needs to be discussed or I missed something, please let me know in the comments.